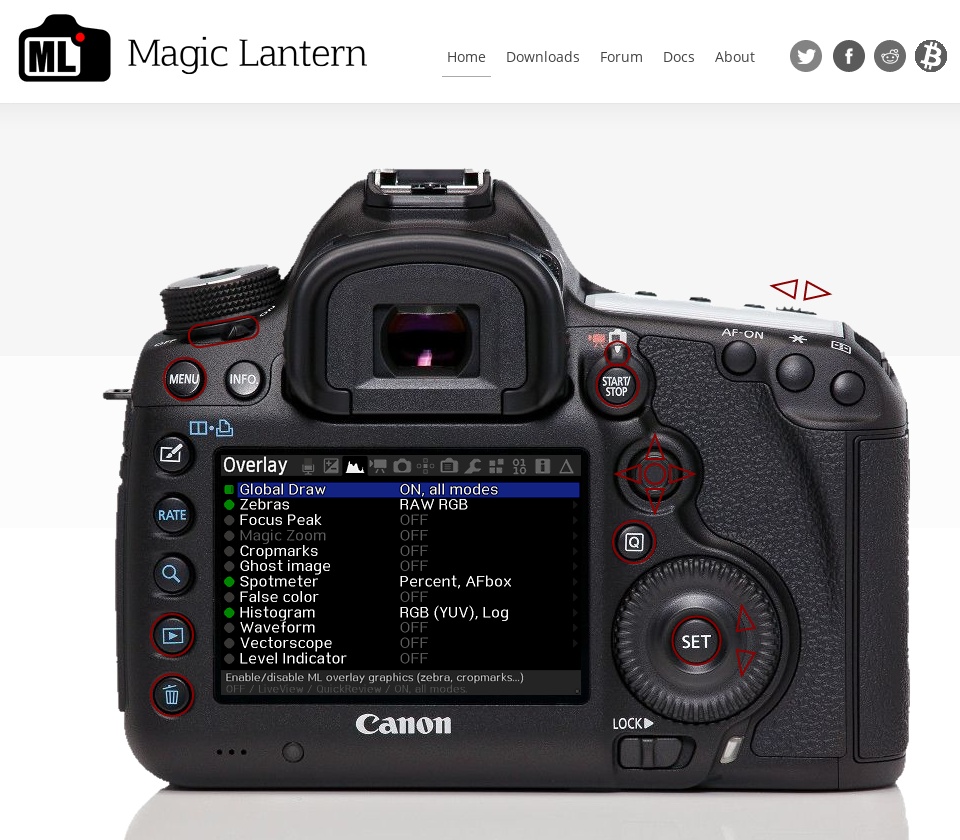

While preparing the application to Software Freedom Conservancy (see "Applying for fiscal hosting" for context), I was a bit surprised to find quite a few academic works mentioning Magic Lantern. I already knew about two papers mentioning Dual ISO, but apparently our software can be useful beyond typical camera usage.

https://scholar.google.com/scholar?q=magiclantern.fm

https://twitter.com/autoexec_bin/status/1346864496338415616

As such, today I've moved previous discussions and experiments related to scientific papers into this newly created Academic Corner - hoping something good might come out of this eventually.

I know, I know, too little, too late...
