UPDATED 2024-01-23

Hello,

Just released a new focus sequencing feature that lets us create and manage a list of focus points, each set via autofocus and given its own transition duration, and then easily replay the sequence step by step while recording video, using a single button push.
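To make the idea of a focus sequence more concrete, here is a minimal C sketch of how such a list could be modelled. Everything in it is hypothetical: `focus_point_t`, `focus_sequence_t`, `seq_add`, `seq_next` and `seq_rewind` are illustrative names, not the focus_seq module's actual code, and lens positions are assumed to be integer focus steps. Each button push advances to the next point and spreads that point's transition duration evenly over the motor steps needed to reach it.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

#define MAX_FOCUS_POINTS 16  /* hypothetical capacity */

typedef struct {
    int focus_pos;      /* hypothetical: lens position (in focus steps) stored after autofocus */
    int transition_ms;  /* duration of the move from the previous point to this one */
} focus_point_t;

typedef struct {
    focus_point_t points[MAX_FOCUS_POINTS];
    int count;    /* number of stored points */
    int current;  /* index of the point the lens currently sits at */
} focus_sequence_t;

/* Append a focus point; returns 0 on success, -1 if the list is full. */
static int seq_add(focus_sequence_t *seq, int focus_pos, int transition_ms)
{
    assert(seq != NULL);
    if (seq->count >= MAX_FOCUS_POINTS)
        return -1;
    seq->points[seq->count].focus_pos = focus_pos;
    seq->points[seq->count].transition_ms = transition_ms;
    seq->count++;
    return 0;
}

/* Go back to the first point before a new replay (the first point's
 * transition_ms is unused, since replay starts from there). */
static void seq_rewind(focus_sequence_t *seq)
{
    seq->current = 0;
}

/* One button push: advance to the next point and report how many steps to
 * drive (sign = direction) and how long to wait between steps so the move
 * lasts about transition_ms. Returns 0 if a move was planned, -1 at the end. */
static int seq_next(focus_sequence_t *seq, int *steps, int *delay_ms)
{
    if (seq->current + 1 >= seq->count)
        return -1;
    const focus_point_t *from = &seq->points[seq->current];
    const focus_point_t *to = &seq->points[seq->current + 1];
    *steps = to->focus_pos - from->focus_pos;
    int n = abs(*steps);
    *delay_ms = n ? to->transition_ms / n : 0;  /* spread the duration over the steps */
    seq->current++;
    return 0;
}
```

On a camera, the returned step count and per-step delay would then be handed to whatever routine actually drives the focus motor; the module relies on lens_focus for that, but its exact parameters are not assumed here.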

Demonstration video:

Documentation:

The complete documentation (explaining both why lens calibration was a challenge and how to use the feature) is available online via this Markdown link.

Download link:

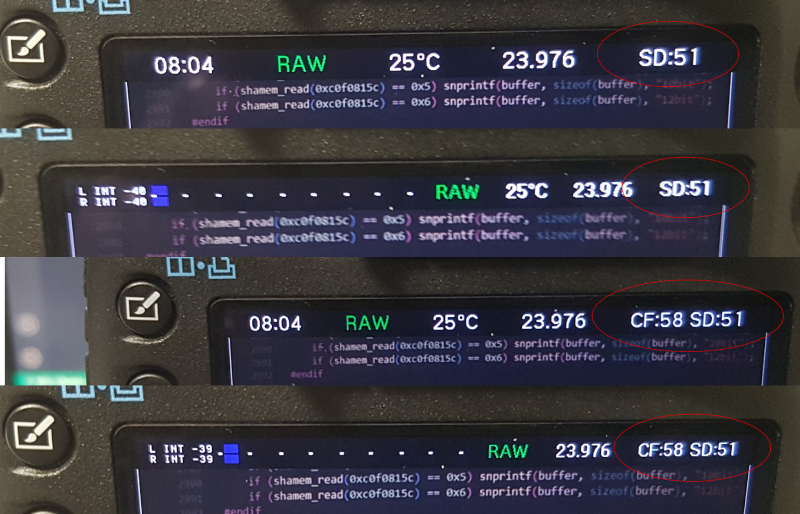

5D3 users: pre-builds for firmware 1.1.3 and 1.2.3 are available in this GitHub repository, which is basically an up-to-date fork of Danne's repository. It includes all the modules, plus the ultrafast framed preview feature and the SD/CF dual-slot free space display.

FAQ:

- Does this replace the previous cinematographer module?

Yes, it's a complete replacement, with a much more user-friendly GUI, a lens calibration process and, above all, autofocus-based sequence creation, which was not possible before.

- Why does it run only on the 5D3, given that it's mainly an ML module? Is a port to other cameras possible?

Basically, because a change to the ML lens.c code was first required to get a reliable lens_focus function, which the focus_seq module depends on (explained in the documentation).

This is why it's currently embedded in the only Magic Lantern GitHub repository that provides this change. However, since the change doesn't affect the legacy code so far, a port to other repositories for other cameras remains very feasible (pending).

Acknowledgements:

Thanks a lot to @names_as_hard for code review, support and advice, much appreciated!

23-Jan-2024 update:

Following a comment by a user (@bigbe3) on YouTube, I just fixed the module to properly handle lenses that don't report the focus distance and/or that get stuck at the initial calibration step because no forward lens limit is detected, such as the Canon EF 50mm f/1.8 II. The download link above has been updated accordingly.