#1

Post-processing Workflow / Re: Youtube Encoding: Upscaling to 4K before uploading doesn't work

August 23, 2018, 07:50:49 PM

@allemyr everything you asked is in the "Test Methodology" section. Just read it, please.

#2

Share Your Videos / Re: 24 Hours In Paris - 5D Mark III - 60fps Raw

August 21, 2018, 06:14:23 AM

Nice. Liked the sound design.

#3

Raw Video / Re: Advise on choice: 5Od, 7d, eos m

August 18, 2018, 02:56:02 AM

Quote from: stickFinger on August 18, 2018, 12:29:20 AM

Interesting. Despite adding around 300 bucks to the whole package, the results seem beautiful 😊

I must confess that I'm very interested in trying some different vintage lenses, e.g. Canon FD, C mount and even some PL mounts. That's why I'm considering the EOS M.

Have you ever used one?

Not the same guy, but my recommendation: stay away from FD lenses. They need an optical adapter to work on the EF mount, and these adapters generally add chromatic aberration and other optical problems.

Nikkor lenses, on the other hand, work very well. I currently use a 55mm f/1.2 Nikkor-S.C; it's a very good lens.

C mount and PL mount lenses are too expensive, probably not worth it.

#4

Post-processing Workflow / Re: Youtube Encoding: Upscaling to 4K before uploading doesn't work

August 17, 2018, 03:38:31 AM

Quote from: trailblazer on August 16, 2018, 08:53:38 PM

Been lurking for a long time, just registered to post this, I just can't really understand what's going on here.

Can you send me the original file you uploaded? I can do the test again, using your files, if you want. It needs to be without interpolation, without sharpening and in a format without much lossy compression, like ProRes or DNxHR.

#5

Post-processing Workflow / Re: Youtube Encoding: Upscaling to 4K before uploading doesn't work

August 17, 2018, 03:35:58 AM

Quote from: allemyr on August 16, 2018, 12:40:15 PM

I know you can download with different quality settings with youtube-dl. This is set to "bestvideo" in the command prompt, and these are the videos that are streamed when viewing the highest quality available on Youtube.

The "-f best" will download the highest quality and that's not what we are testing here. We are testing 1080p vs 1080p, not 1080p vs 4K...

Quote

If you want, 50mm1200s, you can upload the files you downloaded from Youtube with youtube-dl. Please do that; I guess they won't be bigger than 200 MB each.

Why? You can download them with youtube-dl. It will be the same file. Here are the SHA-256 hashes (of the same files used in the test, before converting to raw YUV):

Non-Interpolated H.264: 9ACAB309BA66A05D4B8F8D592C2CF6CC00C1817F6CD4D5F6EDCF977A7F9DD45D

Interpolated H.264: 9B271ACD4833E9F3BEFBCAE05D8432CFEADD18833F361E5E1461B3CB87DCF8AF

Interpolated VP9: D501E3DCF287EFADB9A9A5C661C178DC7A8341E60E7625424DC14B2E00A888D1
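For anyone who wants to check a download against these hashes, here is a minimal Python sketch. The hex digests are copied from the list above; the function names and the file name in the usage note are placeholders of mine, not something used in the original test.

```python
import hashlib

# SHA-256 digests as published in the post.
PUBLISHED = {
    "Non-Interpolated H.264": "9ACAB309BA66A05D4B8F8D592C2CF6CC00C1817F6CD4D5F6EDCF977A7F9DD45D",
    "Interpolated H.264": "9B271ACD4833E9F3BEFBCAE05D8432CFEADD18833F361E5E1461B3CB87DCF8AF",
    "Interpolated VP9": "D501E3DCF287EFADB9A9A5C661C178DC7A8341E60E7625424DC14B2E00A888D1",
}


def sha256_of(path, chunk_size=1 << 20):
    """Return the lowercase hex SHA-256 of a file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path, expected_hex):
    """Compare a file's digest with a published one, ignoring letter case."""
    return sha256_of(path).lower() == expected_hex.lower()
```

Usage would be something like `matches("downloaded_137.mp4", PUBLISHED["Non-Interpolated H.264"])`, where the file name is hypothetical.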

Quote

I know raw YUV420p tends to be very big, especially at UHD resolution, but can you please provide a short version of it here, maybe just 3-10 frames instead of 250 or so? My UHD 250-frame raw YUV420p file was 8 gigabytes, so I understand that it's hard to share.

Here's the original DNxHR:

https://drive.google.com/file/d/1h8w-jbb6mhEnDxw3qsaGxwOcmDxWPrTQ

You can convert the original file to raw YUV420 using ffmpeg:


ffmpeg -i input.mov -c:v rawvideo -pix_fmt yuv420p out.yuv
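To get a feeling for how big the raw output will be, here is a rough Python sketch of the size arithmetic for 8-bit YUV 4:2:0 (one full-resolution luma plane plus two chroma planes at half the width and half the height). The function names are mine, and the 600-frame count is the one used for the analysis in this thread.

```python
def yuv420p_frame_bytes(width, height):
    """Bytes in one 8-bit yuv420p frame: Y plane plus subsampled U and V planes."""
    luma = width * height
    # Chroma planes are subsampled 2x in both directions (rounded up for odd sizes).
    chroma = 2 * ((width + 1) // 2) * ((height + 1) // 2)
    return luma + chroma


def yuv420p_clip_bytes(width, height, frames):
    """Total size of a raw clip, which has no container or header overhead."""
    return yuv420p_frame_bytes(width, height) * frames


size = yuv420p_clip_bytes(1920, 1080, 600)
print(f"1080p, 600 frames: {size / 1e9:.2f} GB")
```

If only a handful of frames is needed for sharing, ffmpeg's `-frames:v` output option can cap the number of frames written.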

Quote

I don't want to go too much further, but even if the quality is not equally good in your video, I see the same difference between Youtube UHD and Youtube 1080p,

That's because you're probably comparing 1080p with 4K, so the resizing done by your browser adds some sharpening. This doesn't mean the compression is better...

You cannot compare different resolutions using SSIM (the resolution needs to match the reference footage), but I could try to download the 3840x2160px VP9 (format code 313) and resize it to 1920x1080px, as the browser would do in the youtube player on a fullscreen Full HD display. Not sure if this would work.
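A possible sketch of that resize step, written as a small Python helper that only builds the ffmpeg argument list. The bicubic scaler is an assumption (I don't know which filter the browser really uses), and the file names are placeholders.

```python
import shlex


def build_downscale_cmd(src, dst, width=1920, height=1080, scaler="bicubic"):
    """Build an ffmpeg command that rescales a video and writes raw yuv420p.

    The scaler choice is a guess at what a browser might do, not a known fact.
    """
    return [
        "ffmpeg",
        "-i", src,
        "-vf", f"scale={width}:{height}:flags={scaler}",
        "-c:v", "rawvideo",
        "-pix_fmt", "yuv420p",
        dst,
    ]


cmd = build_downscale_cmd("vp9_2160p.webm", "vp9_downscaled_1080p.yuv")
print(shlex.join(cmd))  # paste into a shell, or pass cmd to subprocess.run
```

The resulting raw file has the same resolution as the reference, so it could be fed to the same metrics as the other files, keeping in mind that the scaler itself changes the scores.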

#6

Post-processing Workflow / Re: Youtube Encoding: Upscaling to 4K before uploading doesn't work

August 17, 2018, 02:56:35 AM

Quote from: Audionut on August 16, 2018, 06:31:18 AM

I've added some code to your post to reduce the image sizes until the images are clicked.

Nice work. I hope to have some more time in the future to add further comment.

Regards.

Thanks. Feel free to change anything if you want, I'm sure there are some typos in there (English is not my first language).

#7

Raw Video / Re: Building A Camera From Scratch, Converting the Raw Data to an Image

August 15, 2018, 05:57:28 PM

See the Axiom project: https://www.apertus.org/axiom

#8

Raw Video Postprocessing / Re: Making a New MLV Processing App! [Windows, Mac and Linux]

August 14, 2018, 12:50:23 PM

Quote from: masc on August 14, 2018, 10:16:33 AM

With this darkframe crash some light comes in the dark for me... on Windows only, memory allocation does not work as expected. See issue #96 on our github repos.

Ha! Told you it was malloc()

If you need to test some patch, I will be here.

#9

Post-processing Workflow / Re: Youtube Encoding: Upscaling to 4K before uploading doesn't work

August 14, 2018, 12:41:00 PM

Quote from: tonij on August 14, 2018, 11:32:10 AM

Screenshot of both videos at 1080p,

Upscaled upload looks way better?

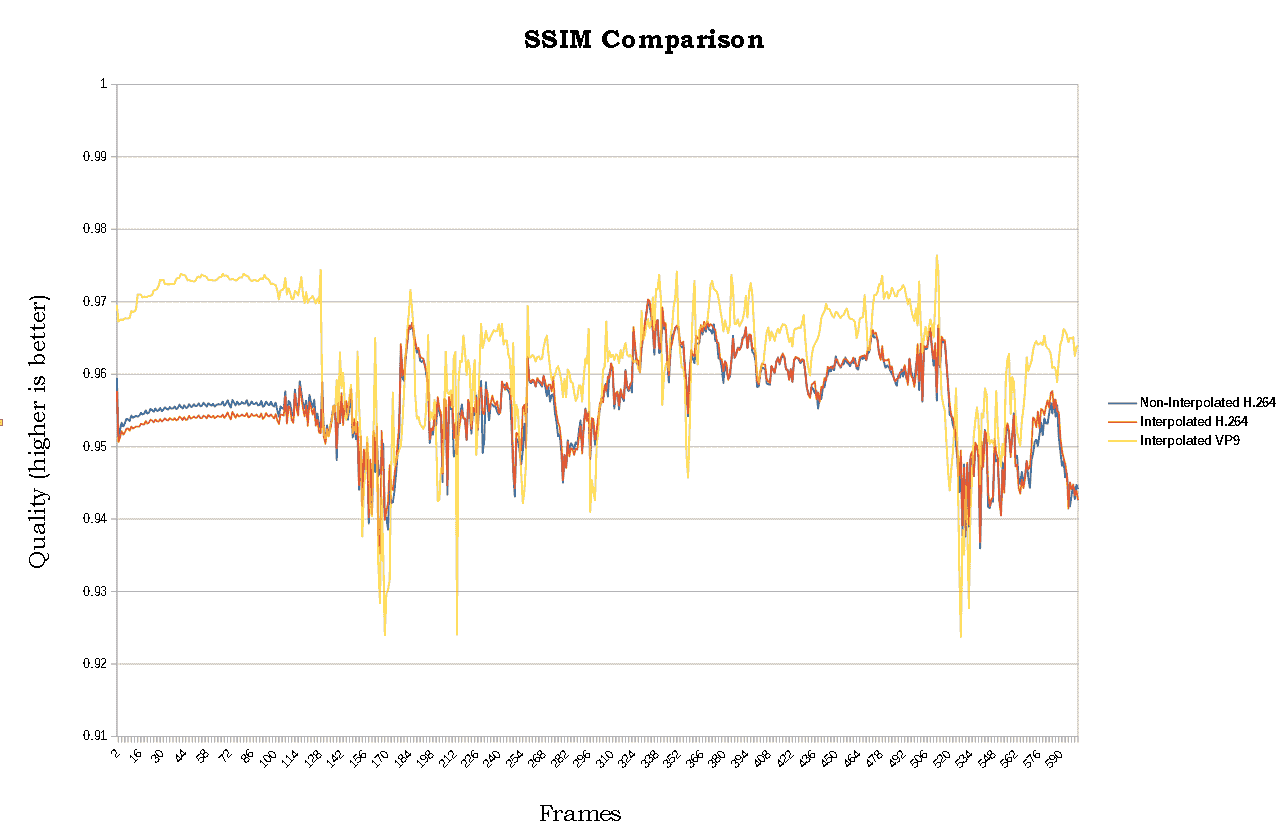

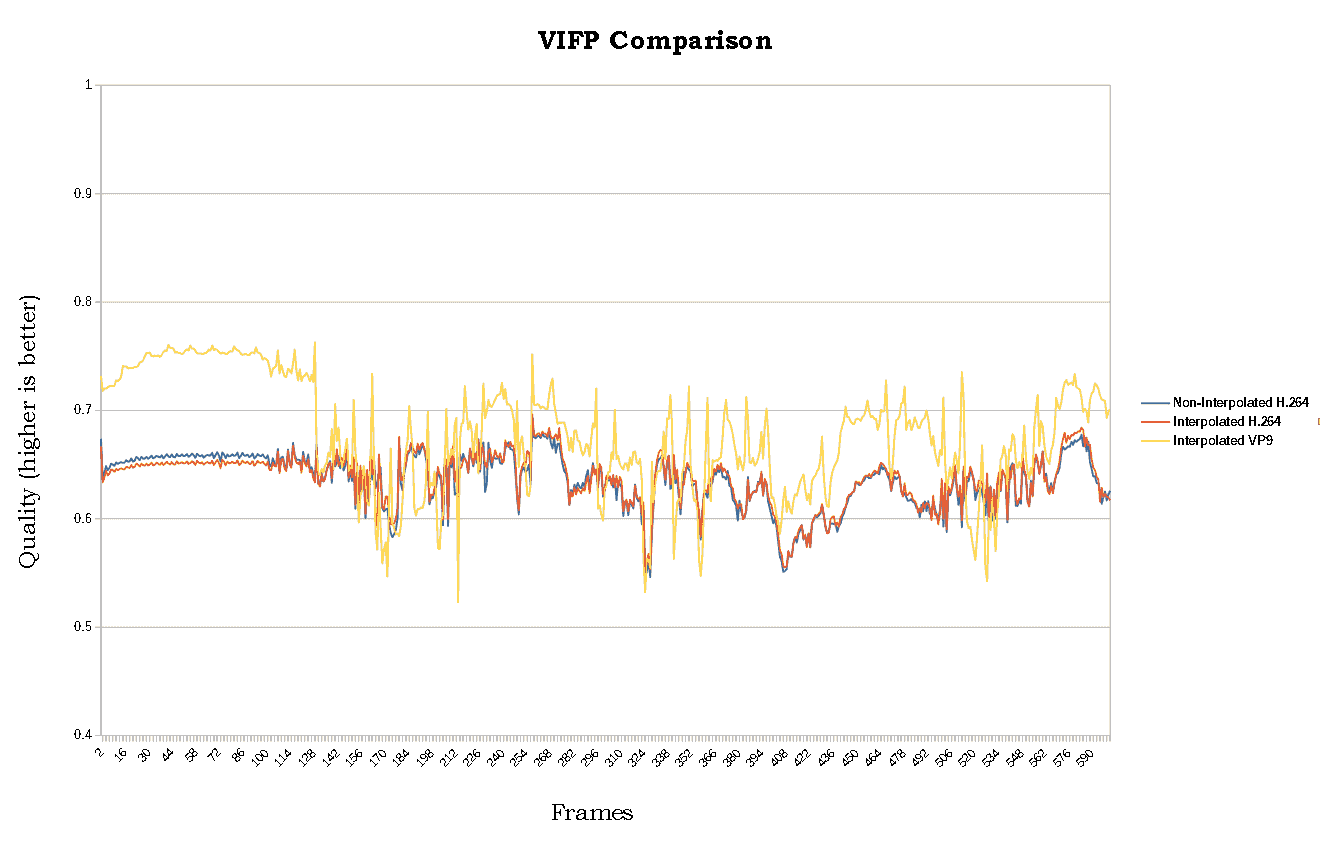

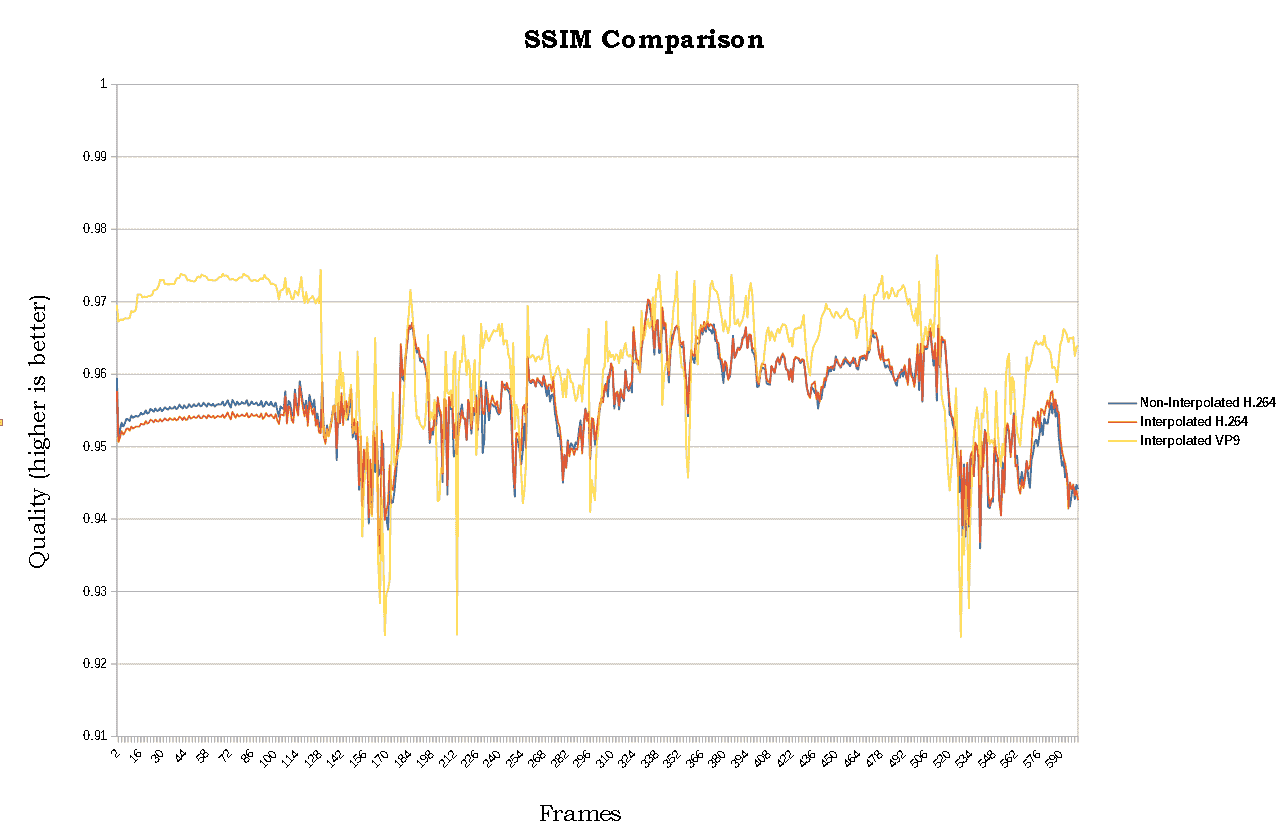

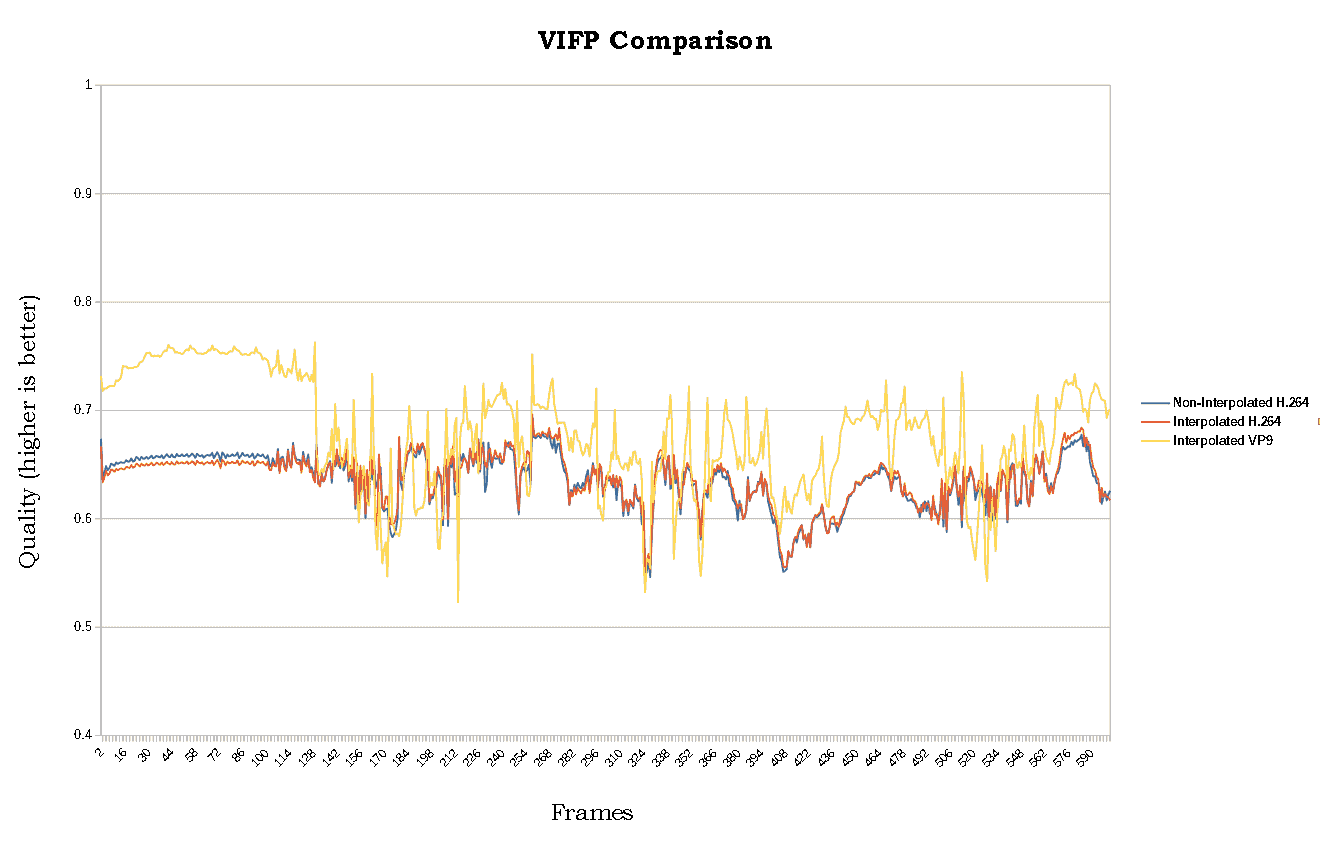

See the chart. The static image scores higher on all metrics for VP9, but the parts with movement score lower on all metrics too. The average difference between the two is 0.15% on MSSSIM, which is not perceptible in moving images (you can only notice it by looking at a single frame, not at 24 fps).

Also, your screenshot is not the correct way to test it, as you don't know if youtube is using H.264 or VP9, since youtube adapts the format and resolution according to your network speed (using DASH - Dynamic Adaptive Streaming over HTTP). The higher perceived quality can also come from the sharpness added by the interpolation.

Quote from: masc on August 14, 2018, 12:00:59 PM

But this way you upscale also the artefacts coming from conversion in point 2?!

Yes, but DNxHR has virtually no artifacts due to its high bitrate. As I said in step "3", I tried to keep the 'normal' workflow that the average user would follow (upscaling using Premiere). Another point: if you upscale before editing, you'll need much more space to store each sequence. That's even more impractical than just upscaling the finished project.

Quote

I think this way the upscaled version can't be better (until this step).

The file will be re-encoded by Youtube anyway. The rationale behind this "upscaling to 4k" idea is that YT converts the "4k" file directly to VP9 instead of low-bitrate H.264. But, as the results show, the gain in perceptual quality is very small. The biggest difference was on the averaged VIFP, with 4.18%.

Quote

What happens if you resize in MLV App?

I can test again, but the results will be the same...

#10

Post-processing Workflow / Youtube Encoding: Upscaling to 4K before uploading doesn't work

August 14, 2018, 05:33:59 AM

TL;DR: Upscaling to achieve better youtube quality is a waste of time.

Following previous discussion, I decided to do my own tests since I couldn't find any good article about this.

The hypothesis: upscaling 1080p videos to UHD (3840x2160px) before uploading to youtube increases the video conversion quality for 1080p.

The argument for this is that youtube uses VP9 codec for resolutions higher than 1080p, instead of H.264.

Test Methodology

1- 20s video using 50D MLV with resolution of 1920x1080px

2- Processed with MLVApp (no sharpness or denoise filter) and exported to DNxHR HQ 8bit. This file was used as the reference file.

3- Interpolated the reference file to 3840x2160px on Premiere Pro v12.0.0 (with the "Use Maximum Render Quality" box enabled) and exported to DNxHR HQ 8bit. I didn't want to use a better interpolation algorithm, like NNEDI3 or a super-resolution-based one, because most people just use Premiere or Final Cut to do this.

4- Uploaded both and waited for youtube conversion. Note: youtube is now accepting direct DNxHR uploads.

5- Downloaded with youtube-dl using format code 137 (standard for 1080p H.264 files) and 248 (standard for 1080p VP9 files). youtube-dl is a Python script that fetches the files directly from the googlevideo servers, so there's no conversion in between.

6- Converted to raw YUV420p using ffmpeg

7- Analyzed with VQMT tool (600 frames), to get MSSSIM, SSIM and VIFP values. Output to CSV.

8- Graphs using LibreOffice Calc
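Steps 7 and 8 boil down to averaging one per-frame score per metric. A minimal Python sketch of that averaging follows; the CSV layout (a `frame,value` header, one row per frame and possibly a trailing summary row) is an assumption for illustration and may not match what VQMT really writes.

```python
import csv
import io
from statistics import fmean


def mean_metric(csv_text):
    """Average the per-frame scores, skipping any non-numeric summary row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    values = [
        float(row["value"])
        for row in reader
        if row["frame"].strip().isdigit()  # keep only real frame rows
    ]
    return fmean(values)


# Tiny made-up sample, only to show the expected shape of the input.
sample = "frame,value\n0,0.990\n1,0.980\n2,0.985\naverage,0.985\n"
print(round(mean_metric(sample), 6))
```

Recomputing the mean from the frame rows, instead of trusting a summary row, also makes it easy to average over a sub-range (for example only the frames with movement).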

Non-Interpolated link: https://youtu.be/HtR-hJe-2Wo

Interpolated link: https://youtu.be/JMbeoiblla8

Note

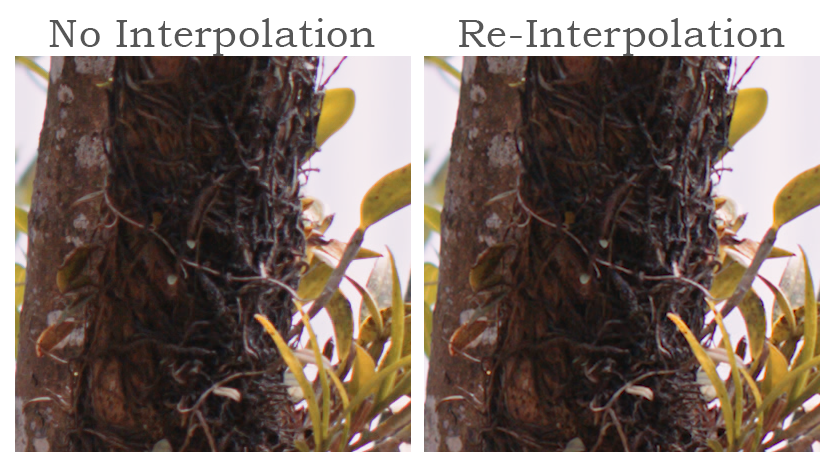

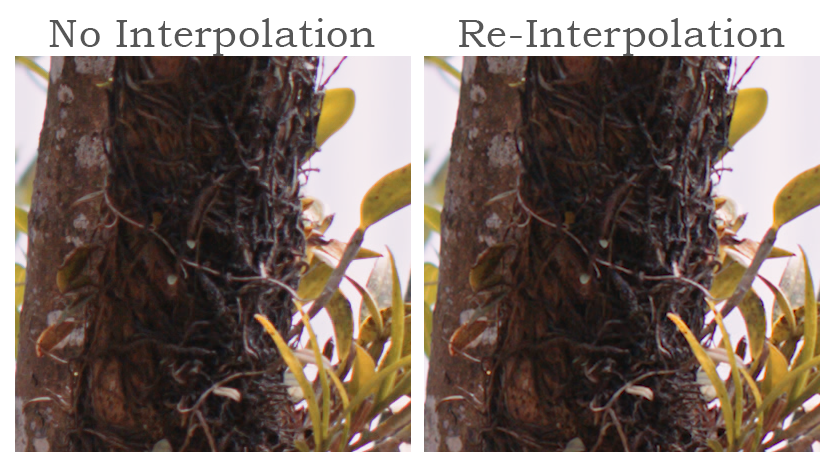

Interpolation adds artifacts, and downscaling can add what seems to be sharpness. As you can see in the example images, the "re-interpolated" crop (using the Bicubic algorithm) seems to be sharper. This can affect the test, but I did not apply any sharpening to any of the files.

Results

Averaged from 600 frames. The closer to 1.0, the better:

Averages

MSSSIM:

Non-Interpolated H.264: 0.989555

Interpolated H.264: 0.989429

Interpolated VP9: 0.991146

SSIM:

Non-Interpolated H.264: 0.956130

Interpolated H.264: 0.956211

Interpolated VP9: 0.962942

VIFP:

Non-Interpolated H.264: 0.635854

Interpolated H.264: 0.636947

Interpolated VP9: 0.677739

Difference between the Non-Interpolated H.264 and the Interpolated VP9 averages, in percentage points (absolute difference of the scores × 100):

- SSIM: 0.68%

- VIFP: 4.18%

- MSSSIM: 0.15%
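The arithmetic behind these three figures can be reproduced with a few lines of Python. The averages are the ones listed under Results; the listed differences appear to match the absolute difference of the averages multiplied by 100 (cut to two decimals), so the sketch prints that next to the relative change for comparison. The function names are mine.

```python
# Averages from the Results section (600 frames each).
AVERAGES = {
    "MSSSIM": {"h264": 0.989555, "vp9": 0.991146},
    "SSIM": {"h264": 0.956130, "vp9": 0.962942},
    "VIFP": {"h264": 0.635854, "vp9": 0.677739},
}


def point_difference(ref, other):
    """Absolute difference of two scores, in percentage points."""
    return (other - ref) * 100.0


def relative_difference(ref, other):
    """Change relative to the reference score, in percent."""
    return (other - ref) / ref * 100.0


for name, avg in AVERAGES.items():
    points = point_difference(avg["h264"], avg["vp9"])
    relative = relative_difference(avg["h264"], avg["vp9"])
    print(f"{name}: {points:.4f} points, {relative:.4f}% relative")
```

Either way of counting leaves MSSSIM and SSIM below one percent; VIFP is the only metric where the two ways of counting differ noticeably.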

Conclusion

The difference is placebo or induced by the added sharpness from the re-interpolation.

Besides wasting time, processing power and network bandwidth, you'll generate interpolation artifacts (I noticed some distortion in fine details in the shadows). VP9 has a better compression ratio than H.264, but this doesn't mean youtube keeps the same bitrate it uses for H.264. In fact, that's the whole point of lossy compression research: less data for the same perceptual quality, so you need less storage and the video loads faster.

Haven't tested with HDR uploads (10-bit, Rec.2020).


#11

Hardware and Accessories / Re: Which 24-70 for video with 5D3 (considering binning!)

August 14, 2018, 12:12:23 AM

Quote from: adrjork on August 13, 2018, 11:48:43 PM

Might you be more specific? Any specific model, for example?

(Thanks)

I'm not a specialist on this subject, but I heard the old Distagon from Zeiss (the 25mm f/2.8) and the Elmarit-R from Leitz (24mm f/2.8) are the good ones.

These two companies produced, and are still producing, the best optics in the world. Anything produced by them for professional photography/cinematography in the last 50 years is top quality. Zeiss has a contract with Arri (arguably the best commercial cinema camera producer today). The Leica division that produces the optics, Leitz, designed the Primo series for Panavision. Summicron lenses from Leica are known to have the best sharpness and contrast ever (for spherical lenses; in the anamorphic area it's the G-series from Panavision).

#12

Hardware and Accessories / Re: Which 24-70 for video with 5D3 (considering binning!)

August 13, 2018, 04:42:44 PM

Quote from: adrjork on August 12, 2018, 10:04:06 PM

Unfortunately I can't go for vintage lenses right now, because I started a feature project months ago with modern lenses

Well, if you want to use manual lenses in the future, then I suggest you buy a cheap 24-70 for now. Don't waste money on some fancy Canon lens.

Quote

Anyway, for future projects, I'm surely interested into testing vintage lenses.

Takumar 55mm could be one, but also Helios 58mm 44-2, and Jupiter 9 85mm.

For 24mm and 35mm, any vintage advice?

Back in the day, Zuiko (OM series) made some good wide-angle lenses.

Of course, if you want great quality you'll have to spend more money on old Zeiss or Leica lenses...

#13

Hardware and Accessories / Re: Which 24-70 for video with 5D3 (considering binning!)

August 12, 2018, 02:27:53 PM

Canon is better on all MTF measures. I don't know about chromatic aberration, vignetting and distortion, but the "L" series from Canon usually has very little of that. But there's a difference of $400 between the two, so...

I would recommend, though, that you look into old primes. Canon lenses have this "digital look" and I personally don't find it suitable for video. With $1200 you could buy a neat Takumar 50mm f/1.4 for about $300, a Rokinon Cine DS DS24M-C 24mm T1.5 for $700, and a Black ProMist 1/8 filter to tone down the "digital look" of the Rokinon a bit (for $83)...

Much better choices, in my opinion.


#14

Share Your Videos / Re: My DOP demo reel - 90% ML Raw - 5D MarkIII

August 10, 2018, 11:19:23 AM

Damn, nice color grading. The scene at 0:22 seems like Alexa (the flat highlights). Do you care to share your workflow for this specific scene? Really liked it.

#15

Share Your Videos / Re: commercial for Halliburton canon 5d mark iii raw

August 10, 2018, 11:10:53 AM

Very nice!

#16

Hardware and Accessories / Re: Jammed focusing on old lens (any advice)

August 08, 2018, 05:59:12 PM

Does it have an adapter with correction lenses? The FD mount, IIRC, needs a correction element between the sensor and the lens to make it focus at infinity.

#17

Share Your Videos / Re: The 7D still competing!

August 08, 2018, 02:55:03 AM

Quote from: allemyr on August 07, 2018, 08:33:15 PM

Can't you check other test, do you have to do a statement that you will do a test in a thread, and then never do it, just check out what others do instead?

You might don't do these tests, i don't know but

Burden of proof. You guys have been pushing this upscaling thing for months now, you should prove it. I'm trying to find some rational explanation for it, and the data until now does not follow what you are saying.

This doesn't seem like a big deal, but you might be spreading bullshit and affecting the workflow of many people. Uploading upscaled videos not only takes much more time to render, but also requires more storage space and network bandwidth. For professionals working with this platform, this is very relevant.

You're in a forum to discuss rationally, not just to show off and do small talk.

Nevermind.

#18

Hardware and Accessories / Re: EOS M lens suggestions

August 08, 2018, 02:35:52 AM

Buy large-aperture manual prime lenses. The Helios 44-2 is cheap and good.

#19

Share Your Videos / Re: The 7D still competing!

August 07, 2018, 12:41:38 PM

Quote from: IDA_ML on August 07, 2018, 11:29:19 AM

Give it a try for yourself and you will see.

Quote from: allemyr on August 07, 2018, 11:46:45 AM

Just take a look yourself between your UHD and 1080p upload, 1080p on youtube is quite terrible for most people.

Ok, I'll see if I can do some tests. This article tested it and, based on their images, the upscaled version is in fact better. But I downloaded the files using youtube-dl (the same format code, 137) and, examining them with ffprobe, the upscaled one has a lower bitrate than the normal one (1299 vs 2643 kb/s). I think this guy actually applied a sharpening filter after the interpolation; that's why it looks better.

I find it pathetic that Google does not release more options to professional videomakers using their platform, as many people use it for professional work, including the high-end industry (cinema and advertising). I expect the AV1 codec to solve that, but it will probably be delayed for 5 more years.

PeerTube could be a thing already

#20

Share Your Videos / Re: The 7D still competing!

August 07, 2018, 09:33:32 AM

I'm not sure about this upscaling idea... does anyone have a good argument for it?

Using youtube-dl, you can see an increase of 4.2x in the bitrate when comparing the 1080p H.264 with the VP9 "4k". But the resolution is 4 times bigger (not really, just interpolated). Supposing it's linear, you only gain 0.2 MB/s on bitrate. But it's not linear, as the codec is different (H.264 vs VP9). I don't know if this can be calculated only using bitrates.
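As a back-of-the-envelope check of that reasoning, the bitrate increase can be normalised by the pixel count. This is only a sketch under the same "linear" assumption; it ignores the codec difference, and the function name is mine.

```python
def per_pixel_gain(bitrate_ratio, pixel_ratio):
    """How many times more bits each pixel gets, if bits scaled linearly with pixels."""
    return bitrate_ratio / pixel_ratio


# UHD has exactly four times the pixels of 1080p.
pixel_ratio = (3840 * 2160) / (1920 * 1080)

# 4.2x is the bitrate increase observed with youtube-dl.
gain = per_pixel_gain(4.2, pixel_ratio)
print(f"bits per pixel: x{gain:.2f}")
```

Under that assumption the upscaled stream gets only about 5% more bits per pixel, and since those pixels are interpolated they carry no new detail.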

On the other hand, the audio quality is for sure much better. Youtube is using opus codec for audio encoding and this can only be used while playing with webm from the DASH manifest, as the normal (non DASH) webm uses vorbis instead of opus.

The YT blog explains some stuff about how the videos are processed, but there's nothing about this upscaling thing.

I think if you use a good interpolation algorithm, like Spline64 or crazy stuff like NNEDI3, it might be a good idea to upscale, but I don't think this is worth all the additional computational power and bandwidth you'll need to process and upload.

As I work with youtube to upload videos of my clients, this information is important to me. If anyone has any real data (not just the empirical "it's much better duude!!1!"), please link here.


#21

Share Your Videos / Re: 5D Mark III - Shutter Social (REC2020 HDR10)

August 07, 2018, 03:38:08 AM

Quote from: djvalic on August 07, 2018, 01:45:14 AM

I set input to Rec709

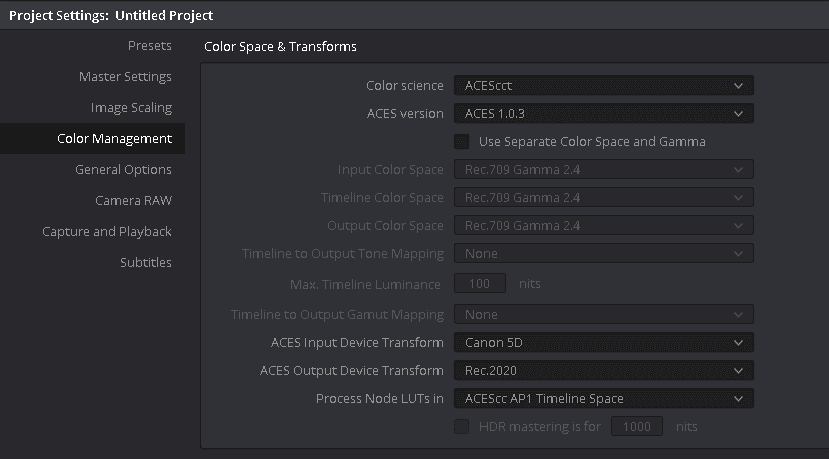

This is what is wrong.

You're compressing everything to a small color space, then expanding it to a larger one.

Convert MLV to CinemaDNG. Go to Resolve and configure it like the image below. Do the color grading and export to ProRes 422. I suggest you don't make these forced "teal and orange" gradings, as they look very ugly, especially if you use some LUT to do it automatically.

#22

Raw Video Postprocessing / Re: Making a New MLV Processing App! [Windows, Mac and Linux]

August 04, 2018, 11:39:30 PM

Quote from: masc on August 04, 2018, 07:44:01 PM

Note that openmp version is still buggy!!! That is why next version is not yet released. But this next official version will have openmp! First tests on my old 2010 MacBook bring 30-40% more speed, when using "AMaZE cached" also up to 60% more speed than in last version. But here and there it is still tricky in the code...

This might be useful: TaskSanitizer

Quote

TaskSanitizer implements a method to detect determinacy races in OpenMP tasks. It relies on open-source tools and is mostly written in C++. It launches through a custom Bash script called tasksan which contains all necessary command-line arguments to compile and instrument a C/C++ OpenMP program. Moreover, it depends on LLVM/Clang compiler infrastructure and contains a compiler pass which instruments the program undertest to identify all necessary features, such as memory accesses, and injects race detection runtime into the produced binary of the program. Race detection warnings are displayed on the standard output while the instrumented binary executes. TaskSanitizer also relies on LLVM's OpenMP runtime (https://github.com/llvm-mirror/openmp) which contains runtime interface for performance and correctness tools (OMPT). OMPT signals events for various OpenMP events -- such as creation of a task, task scheduling, etc. -- and TaskSanitizer implements necessary callbacks for these events for tacking and categorizing program events of each task in the program.

#23

Share Your Videos / Re: German shot-on-5d3 feature film coming to movie theaters.

August 04, 2018, 07:56:29 PM

Quote from: Tyronetheterrible on August 04, 2018, 06:52:07 PM

May be slightly off-topic, but does anyone know where one would get an EF-to-PL adapter for use of Zeiss Primes with a 5D? I've seen some adapters online, but none that would support Zeiss Primes.

IIRC, you have to modify the camera, because the space between the PL lens and the sensor is less than on the EF mount. There are some companies that do this conversion, last time I checked.

#24

Raw Video Postprocessing / Re: Making a New MLV Processing App! [Windows, Mac and Linux]

August 03, 2018, 06:21:32 PM

Quote from: masc on August 03, 2018, 09:38:03 AM

For me it sounds like something is going wrong, before, in memory.

Yes, it's probably something with the memory and, since it doesn't happen on Unix-like systems, it should be some Windows-only expression or something.

Quote

igv_demosaic.c crashes if you use bilinear? That should be impossible.

No, that was a typo, thanks. I meant that bilinear crashes on line 435 of video_mlv.c, the same as AMaZE and IGV. I reproduced this at least 6 times, just to be sure.

Quote

I really would like to help finding!!!

What can I do? The Valgrind idea could help finding...

Quote

malloc.h??? This is a standard library...

Yeah, but Qt Creator shows it as something related to the crash. As you said, this is something with memory allocation.

Quote

I downloaded your file and can't get the IGV bug visible. What else did you do to have that? I just loaded the file and set to IGV.

Edit: I get something similar when loading (a wrong) darkframe and clicking wildly on any RAW Corrections... but the behavior is undefined, visible only at IGV.

Edit2: Something in the IGV debayer is strange: if I disable the debayer WB correction, the artifacts are gone. But if you load any high contrast clip it looks like sh**. Something is clipping, but I am not really able to debug the sources of IGV... sry.

The IGV bug happens on the latest "master". I just opened the MLV and changed to IGV demosaicing.

#25

Reverse Engineering / Re: CMOS/ADTG/Digic register investigation on ISO

August 03, 2018, 05:58:47 AM

I know this is ongoing research and I'm familiar with the open source model. However:


Quote from: Audionut on August 03, 2018, 05:09:35 AM

"Pressure" to put this feature into mainstream, isn't the same as doing what is needed to put this into mainstream.

I'm not putting pressure. I said "I see there's some pressure here".

My point being: if more collaboration from the community is needed to make things faster, then it should be somewhat more accessible for people without programming/math skills to do the tests and share them.

Quote

Otherwise, use raw_diag to monitor the DR as you change the registers.

So, currently, you need to change each register, press HalfShutter to activate raw_diag and just then see the changes? This would take too much time, even for a simple test.

Since the brute-force idea seems to be too complicated, can't this be printed inside the menu (e.g. "Current WL is xxxx", and so on), using an automatic low-resolution silent pic as the base for the raw_diag calculation every time a register is changed? Like a daemon in an operating system. DIGIC is not that powerful, but if the pic is low-res enough this might be possible, no?